Kubernetes

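Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services. It facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

The name Kubernetes comes from the Greek word for helmsman or pilot. The abbreviation K8s stands for the "K", the "s", and the eight letters between them. Google open-sourced the Kubernetes project in 2014. Kubernetes combines more than 15 years of Google's experience running production workloads at scale with the best ideas and practices from the community.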

- Docker

- Master / Slave common

- Master ROOT user only

- Slave Node

- Dashboard

- Ingress

- Private Docker Registry

- Other Kubernetes commands

- Istio Kiali

- Certificate renewal

- Containerd commands

Docker

0. Docker

1. sudo apt-get remove docker docker-engine docker.io containerd runc

2. sudo apt-get update

3. sudo apt-get install apt-transport-https ca-certificates curl gnupg-agent software-properties-common

4. Add Docker’s official GPG key

: curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

--> This appears to have changed to: curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/docker.gpg

5. sudo apt-key fingerprint 0EBFCD88

smend@smend-Slave:~$ sudo apt-key fingerprint 0EBFCD88

pub rsa4096 2017-02-22 [SCEA]

9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88

uid [ unknown] Docker Release (CE deb) <docker@docker.com>

sub rsa4096 2017-02-22 [S]

6. sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

* --> Raspberry Pi 4 : deb [arch=armhf] https://download.docker.com/linux/raspbian buster stable

7. sudo apt-get update

8. sudo apt-get install docker-ce docker-ce-cli containerd.io

9. sudo usermod -aG docker $USER, then log out and log back in
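Steps 4 to 6 rely on apt-key, which is deprecated on recent Debian and Ubuntu releases. The sketch below shows how the repository line can instead be built with a signed-by keyring. The function name docker_repo_line and the keyring path are assumptions made for illustration, not part of the original notes.

```shell
# docker_repo_line ARCH CODENAME KEYRING
# Print the apt source line for the Docker repository.
#   ARCH     e.g. amd64 (on a real host: dpkg --print-architecture)
#   CODENAME e.g. jammy (on a real host: lsb_release -cs)
#   KEYRING  path of the dearmored GPG key (assumed location)
docker_repo_line() {
  printf 'deb [arch=%s signed-by=%s] https://download.docker.com/linux/ubuntu %s stable\n' \
    "$1" "$3" "$2"
}

# Usage on a real host (not executed here):
#   docker_repo_line "$(dpkg --print-architecture)" "$(lsb_release -cs)" \
#     /etc/apt/keyrings/docker.gpg | sudo tee /etc/apt/sources.list.d/docker.list
```

The key itself would be written to the same keyring path with the gpg --dearmor command from step 4.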

- Docker cheat sheet : https://medium.com/@kam6512/%EB%AC%B4%EC%9E%91%EC%A0%95-%ED%95%B4%EB%B3%B4%EB%8A%94-docker-29fa19d8acbc

- docker image prune -a : remove all images not used by any container

- docker image prune -f : remove dangling images without asking for confirmation

- Run Docker (Tomcat)

docker run -it -v /data/tomcat:/data/tomcat --name=python-test tomcat-meta-batch:1 /bin/bash

docker run -d -i -t --name metafilemanager -p 8080:8080 tomcat-meta:0.1

01cb13fd8e1090692aff249b79e9ed77bd75f80071c0b6ecb2023cbaeb791dca

- Enter a running Docker container

docker exec -it c6b29002575e /bin/bash

- Enter a Kubernetes pod

kubectl exec -it -n meta-apps meta-meta-59b56f6694-smtmq -- bash

- Register (commit) a Docker image

docker commit -m "initial commit" -a "tomcat8_meta_image" tomcat8_meta tomcat8:0.1

Exporting an image to a file

- Use this when you want to send an image file to another machine.

- Writing an image held by Docker to an external file

- The image is only bundled into a tar archive and is not compressed. Compress it separately if needed.

- #docker save -o {image file name}.tar {image name}

- Loading an image file into Docker

- Load the file created in the step above into Docker.

- #docker load -i {image file name}.tar
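Because docker save does not compress its output, the save and load steps can be combined with gzip. This is a minimal sketch. The function names save_image_gz and load_image_gz are hypothetical, and it relies on docker save writing to standard output and docker load reading from standard input when no -o or -i option is given.

```shell
# save_image_gz IMAGE OUTFILE
# Write IMAGE as a gzip-compressed tar archive to OUTFILE.
save_image_gz() {
  docker save "$1" | gzip > "$2"
}

# load_image_gz INFILE
# Load an image from an archive created by save_image_gz.
load_image_gz() {
  gunzip -c "$1" | docker load
}

# Usage (not executed here):
#   save_image_gz tomcat8:0.1 tomcat8_0.1.tar.gz
#   load_image_gz tomcat8_0.1.tar.gz
```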

Master / Slave common

sudo su -
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
cat <<EOF > /etc/apt/sources.list.d/kubernetes.list
deb http://apt.kubernetes.io/ kubernetes-xenial main
EOF
apt-get update
apt install kubelet kubeadm kubectl kubernetes-cni -y

Note: the legacy apt.kubernetes.io repository was frozen in September 2023. Newer installations use the community-owned pkgs.k8s.io repositories instead.

- Disable swap (master and worker, as root)

sudo su -
swapoff -a
vi /etc/fstab   # comment out the swap entries with '#'
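The manual /etc/fstab edit above can also be scripted. The following is a minimal sketch. The function name comment_out_swap is hypothetical, and it only prints the modified content so that it can be reviewed before the real file is replaced.

```shell
# comment_out_swap FILE
# Print FILE with every active swap entry commented out.
# Lines that are already comments are left unchanged.
comment_out_swap() {
  sed '/^[[:space:]]*#/!{
/[[:space:]]swap[[:space:]]/s/^/#/
}' "$1"
}

# Usage (review the output, then install it as root):
#   comment_out_swap /etc/fstab > /tmp/fstab.new
```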

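- Finally, register a host name so that images can be pushed to the private Docker registry. In this setup an IP address could not be used as the HTTP exception, so a name had to be registered.

If you have an internal DNS server, use an internal domain name. My home network has no DNS server, so the name is registered by hand.

Private Docker Registry

Add 192.168.0.100 web.joang.com to /etc/hosts (sudo vi /etc/hosts)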
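Since the same hosts entry has to be added on every node, an idempotent helper avoids duplicate lines. This is a sketch only. The function name ensure_hosts_entry is hypothetical, and on a real node it must run as root to write to /etc/hosts.

```shell
# ensure_hosts_entry FILE IP NAME
# Append "IP NAME" to FILE unless a line for IP already lists NAME.
ensure_hosts_entry() {
  if ! awk -v ip="$2" -v name="$3" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit found ? 0 : 1 }' "$1"; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Usage (as root, not executed here):
#   ensure_hosts_entry /etc/hosts 192.168.0.100 web.joang.com
```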

Master ROOT user only

sudo kubeadm init --pod-network-cidr=172.16.0.0/16 --apiserver-advertise-address=192.168.0.100
...
kubeadm join 10.0.1.2:6443 --token nnnnnnnnnnn --discovery-token-ca-cert-hash sha256:nnnnnnnnn   <-- copy this line carefully
...

- Example

...
...
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.100:6443 --token 2bnj8x.t4odyls0snm1bq8b \
--discovery-token-ca-cert-hash sha256:a51bfb3121d40d300e0eb5399610511852597bfb3a5391db37d535cce339f2f8

Result on 2023-10-10

kubeadm join 192.168.0.130:6443 --token zf4ub4.aa659burc1jlp42u \
--discovery-token-ca-cert-hash sha256:dfb5baf24d4e8eec7f9e54a8ce5a376688d8a9d5222496fa0eb0e1c845a22eb4

- When the following error occurs

hyunsu@3-kubemaster:~$ sudo kubeadm init --pod-network-cidr=172.16.0.0/16 --apiserver-advertise-address=192.168.0.200
[init] Using Kubernetes version: v1.28.2
[preflight] Running pre-flight checks
error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR CRI]: container runtime is not running: output: time="2023-10-04T14:44:26Z" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/containerd/containerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"
, error: exit status 1
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
To see the stack trace of this error execute with --v=5 or higher

Remove the containerd config, restart containerd, and run kubeadm init again:

sudo rm /etc/containerd/config.toml
sudo systemctl restart containerd
sudo kubeadm init --pod-network-cidr=172.16.0.0/16 --apiserver-advertise-address=192.168.0.100

- When the API server keeps crashing : https://jh-labs.tistory.com/476 . From Kubernetes 1.24 onward, the following additional steps are required at install time.

# Define the default containerd configuration
sudo mkdir -p /etc/containerd
sudo containerd config default | sudo tee /etc/containerd/config.toml
# Check whether containerd is enabled
systemctl is-enabled containerd
# If it is disabled
sudo systemctl enable containerd
# Edit the config
sudo vi /etc/containerd/config.toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
# SystemdCgroup = false   (false by default; change it to true)
SystemdCgroup = true
# Restart containerd
sudo systemctl restart containerd

https://no-easy-dev.tistory.com/5
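The SystemdCgroup change described above can be applied without opening an editor. This is a minimal sketch. The function name enable_systemd_cgroup is hypothetical, and it prints the modified config instead of editing the file in place so the result can be checked first.

```shell
# enable_systemd_cgroup FILE
# Print FILE with "SystemdCgroup = false" switched to "SystemdCgroup = true".
# Indentation and all other lines are preserved.
enable_systemd_cgroup() {
  sed -E 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/' "$1"
}

# Usage (not executed here): write to a new file, then move it into place.
#   enable_systemd_cgroup /etc/containerd/config.toml > /tmp/config.toml
#   sudo mv /tmp/config.toml /etc/containerd/config.toml
#   sudo systemctl restart containerd
```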

- Master, regular user

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=$HOME/.kube/config
echo 'export KUBECONFIG=$HOME/.kube/config' | tee -a ~/.bashrc

- Master, using Calico (regular user)

kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml
kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

- Not sure about this part:

export kubever=$(kubectl version | base64 | tr -d '\n')
kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$kubever"

Slave Node

sudo su -
# Paste the command printed during the master installation as-is
kubeadm join 10.0.1.2:6443 --token nnnnnnnnnnn --discovery-token-ca-cert-hash sha256:nnnnnnnnn

- How to find the token and hash values above for a slave node: look them up on the master node.

Early morning of 2023-10-12

##### Look up the values on the MASTER NODE #####
hyunsu@3-kubemaster:~$ kubeadm token list
hyunsu@3-kubemaster:~$ kubeadm token create
0eyij7.i07r6rpce1b1qx19
hyunsu@3-kubemaster:~$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
d5813c73d45715c89ff3a43e4a9dc09a7d54ff317b1ed77c1b5c6be873d571f5
hyunsu@3-kubemaster:~$

##### JOIN from the SLAVE #####
Join from the slave with the values above:
sudo kubeadm join 192.168.0.200:6443 --token 0eyij7.i07r6rpce1b1qx19 --discovery-token-ca-cert-hash sha256:d5813c73d45715c89ff3a43e4a9dc09a7d54ff317b1ed77c1b5c6be873d571f5 --v=5

- sudo kubeadm join 192.168.0.200:6443 --token b753ql.bety9l2x2d3aeoov --discovery-token-ca-cert-hash sha256:aa57552f65900a0886792f973075885e5d1675596742c7cc28349ef6c3c1a458

- On Ubuntu 22.04: from Kubernetes 1.24 onward, the following additional steps are required at install time.

# Define the default containerd configuration
sudo mkdir -p /etc/containerd
sudo containerd config default | sudo tee /etc/containerd/config.toml
# Check whether containerd is enabled
systemctl is-enabled containerd
# If it is disabled
sudo systemctl enable containerd
# Edit the config
sudo vi /etc/containerd/config.toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
# SystemdCgroup = false   (false by default; change it to true)
SystemdCgroup = true
# Restart containerd
sudo systemctl restart containerd

- Reset

sudo kubeadm reset cleanup-node

- Example

Run kubeadm token list to check the token. Tokens have an expiry time and disappear once it passes.

kubeadm token list
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
o7g1vf.af2igg8kb05thqjx   23h   2019-10-04T21:38:34+09:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token

If no token is listed, create one. Running the command prints the token value:

kubeadm token create

Next, compute the hash:

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Now join from the client using those two values:

sudo kubeadm join 192.168.0.100:6443 --token 2bnj8x.t4odyls0snm1bq8b \
--discovery-token-ca-cert-hash sha256:a51bfb3121d40d300e0eb5399610511852597bfb3a5391db37d535cce339f2f8
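The join command is just the API server endpoint, the token, and the CA certificate hash put together. The sketch below assembles it from those three values. The function name build_join_command is hypothetical. On the master, kubeadm token create --print-join-command prints a ready-made join command and may be simpler when a new token is acceptable.

```shell
# build_join_command ENDPOINT TOKEN HASH
# Print the kubeadm join command for a worker node.
#   ENDPOINT API server address as host:port
#   TOKEN    bootstrap token from "kubeadm token create" or "kubeadm token list"
#   HASH     hex digest from the openssl pipeline (without the sha256: prefix)
build_join_command() {
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$1" "$2" "$3"
}

# Usage on the master (not executed here):
#   hash=$(openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
#     | openssl rsa -pubin -outform der 2>/dev/null \
#     | openssl dgst -sha256 -hex | sed 's/^.* //')
#   build_join_command 192.168.0.100:6443 "$(kubeadm token create)" "$hash"
```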

Dashboard


kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

- TEST

kubectl proxy --> http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/.

- User Account

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

- Certificate

# mkdir ~/certs
# cd ~/certs
# openssl genrsa -out dashboard.key 2048
# openssl rsa -in dashboard.key -out dashboard.key
# openssl req -sha256 -new -key dashboard.key -out dashboard.csr -subj '/CN=localhost'
# openssl x509 -req -sha256 -days 365 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

- Recommended setup

# kubectl create secret generic kubernetes-dashboard-certs --from-file=$HOME/certs -n kube-system
# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

- 2023-10-23 - When the token Secret has not been created

hyunsu@3-kubemaster:~$ kubectl -n kubernetes-dashboard create token admin-user
eyJhbGciOiJSUzI1NiIsImtpZCI6IlVPZF9GTEpzakhRMGtCOUM4VV9oMWt0aG9VVXVvaW4zQk1wZE1RbEdfTVEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjk2NTA5NzYzLCJpYXQiOjE2OTY1MDYxNjMsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiOTQ0OTFiMGEtZTI5YS00ZTU5LWJmOTMtM2VmZWFjYTFhMmMzIn19LCJuYmYiOjE2OTY1MDYxNjMsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.AiQRSv23cimjPMC8uNWy0M-Cgn5vcNdn32tl-gu3zuCjkzD4RwXxX3vhDMdDIjX_kYPsit0LM1HWxq9vBck_8Lh3WBk-mubD2CdUxmAyH63iGfC47V98Kg4aFRm3nmZU-kXaEG12MozTAqq-8_2G9p3A0ZR4TcJarnjY_waWKd-1kZRvPr_e5hJuI5_o31NAp6bWDQn9Izf28EWIWuzlkasR2vXY2PIpCPHCphiye01en03-dC5mN13fLCaPsuSqs3xx_lA8D4iMmaruSuu6tIDVtvmlFg8eCBApbBjyGdHxpRMqK3eT-XRI_hRpa1gn9gPhVdZWSTDoAHzWXY8vQA

It seems the Secret is no longer created automatically.

After logging in with the token above, check the UID of the account and then create the Secret below.

apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: admin-user
    kubernetes.io/service-account.uid: 43ecdad8-4e2f-4a6a-bd2f-de9a91cfc69b

Create the Secret and apply it to the user (admin-user).

- kubectl -n kubernetes-dashboard edit service kubernetes-dashboard

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
  creationTimestamp: "2020-09-26T14:01:45Z"
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  resourceVersion: "3356"
  selfLink: /api/v1/namespaces/kubernetes-dashboard/services/kubernetes-dashboard
  uid: f064c119-2560-42c2-aa2b-69302aa0866b
spec:
  clusterIP: 10.97.63.192
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

---> Change it as follows (add nodePort and set type to NodePort):

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
  creationTimestamp: "2020-09-26T14:01:45Z"
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  resourceVersion: "3356"
  selfLink: /api/v1/namespaces/kubernetes-dashboard/services/kubernetes-dashboard
  uid: f064c119-2560-42c2-aa2b-69302aa0866b
spec:
  clusterIP: 10.97.63.192
  ports:
  - nodePort: 31055
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

- Check the Dashboard connection details

# kubectl get service -n kubernetes-dashboard
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
calico-typha           ClusterIP   10.109.234.181   <none>        5473/TCP                 47m
kube-dns               ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP,9153/TCP   51m
kubernetes-dashboard   NodePort    10.105.161.59    <none>        443:31055/TCP            35m
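The interactive edit of the Service can likely be replaced by a single kubectl patch. The sketch below only builds the patch body. The function name nodeport_patch is hypothetical, and it assumes the cluster uses the default NodePort range of 30000 to 32767 and that the Service port list is merged by its port field under a strategic merge patch.

```shell
# nodeport_patch PORT
# Print a patch that switches the Dashboard Service (port 443) to
# type NodePort with the given node port.
nodeport_patch() {
  port=$1
  case $port in
    ''|*[!0-9]*) echo "nodePort must be a number" >&2; return 1 ;;
  esac
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "nodePort is outside the default range 30000-32767" >&2
    return 1
  fi
  printf '{"spec":{"type":"NodePort","ports":[{"port":443,"nodePort":%s}]}}\n' "$port"
}

# Usage (not executed here):
#   kubectl -n kubernetes-dashboard patch service kubernetes-dashboard \
#     -p "$(nodeport_patch 31055)"
```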

- Create a Dashboard account

# kubectl create serviceaccount cluster-admin-dashboard-sa
# kubectl create clusterrolebinding cluster-admin-dashboard-sa --clusterrole=cluster-admin --serviceaccount=default:cluster-admin-dashboard-sa

- Look up the account token needed to log in to the Dashboard

hyunsu@kubemaster:~$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep cluster-admin-dashboard-sa | awk '{print $1}') Name: admin-user-token-wn487 Namespace: kubernetes-dashboard Labels: <none> Annotations: kubernetes.io/service-account.name: admin-user kubernetes.io/service-account.uid: ad6f92c1-3ed1-4680-80da-4d9dcf8440e8 Type: kubernetes.io/service-account-token Data ==== ca.crt: 1066 bytes namespace: 20 bytes token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkVEQnlPWER5ek85WjF0VlFScXNJRzZGOW1TMFJRN1lSNVRHcHlnZVdtb00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXduNDg3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhZDZmOTJjMS0zZWQxLTQ2ODAtODBkYS00ZDlkY2Y4NDQwZTgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.PINKyEp5yNQZv1HLvLHrP8GFUs9t3hI6OJ0EQtc7U_DhsNlBb6jMpvbPnKD7IlMJcECZrUZ6q6zlqYVbTLMuW89D-3_X-UDFlvymwfBfjWlH5AzY5h9oCtxhwA6fNljWgAEPhjJHr9ElWsSfIGWLShU_qxIX29gOcMCCZ1qSfQU8donNW-P7OtIYMfRbJe6VY5AqgDBDDFrXtd_Qc_Ne0EPdJpyRpVNpkrsCaZikue3zPqn6ORF-yVHWqulTqVU-gtg-eB0vA1WyYGgEc8lVImzyHogxJ3ysNblSxZt7LhVHB39ZdVr-dYh4UAEs-Iq8mi8OUT_eZjGLGCvR-zK6lg Name: default-token-9x79k Namespace: kubernetes-dashboard Labels: <none> Annotations: kubernetes.io/service-account.name: default kubernetes.io/service-account.uid: 9d2a8308-a11e-4183-aed2-be4aadcafabe Type: kubernetes.io/service-account-token Data ==== ca.crt: 1066 bytes namespace: 20 bytes token: 
eyJhbGciOiJSUzI1NiIsImtpZCI6IkVEQnlPWER5ek85WjF0VlFScXNJRzZGOW1TMFJRN1lSNVRHcHlnZVdtb00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkZWZhdWx0LXRva2VuLTl4NzlrIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImRlZmF1bHQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5ZDJhODMwOC1hMTFlLTQxODMtYWVkMi1iZTRhYWRjYWZhYmUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6ZGVmYXVsdCJ9.0UnxF2W-f4jLxat0QJ4GdSBDaEopxNSQ-UfbWJ65gKVgoxidB1t_YucsqHy5dupmvQVQuXKs7arzuavtWYkQ4Z4nzYtqXP6fDGn6i8TeRM9G6lCvv5zJqkkFQDQjW4XGsgK4UgOzpkIaxgMq4nkckmjt316daJKZ1bP9LxAtbttN6vySh2dj4BrV---ZugIu9CCKOkpWi-fFdvH4ogjNMzT6mXEUkOgJ_lJOXDq7pCbOEStBL5UTF3ybir5io3D5HYSyBH4s29tJqcoJA2pkVuccjPsQbeOfwSs-GD97v4YucxIAZEJEy1UEAelYd5nZFBBl-rTp490NFvun5hr5Ng Name: kubernetes-dashboard-certs Namespace: kubernetes-dashboard Labels: k8s-app=kubernetes-dashboard Annotations: <none> Type: Opaque Data ==== Name: kubernetes-dashboard-csrf Namespace: kubernetes-dashboard Labels: k8s-app=kubernetes-dashboard Annotations: <none> Type: Opaque Data ==== csrf: 256 bytes Name: kubernetes-dashboard-key-holder Namespace: kubernetes-dashboard Labels: k8s-app=kubernetes-dashboard Annotations: <none> Type: Opaque Data ==== priv: 1675 bytes pub: 459 bytes Name: kubernetes-dashboard-token-glnd4 Namespace: kubernetes-dashboard Labels: <none> Annotations: kubernetes.io/service-account.name: kubernetes-dashboard kubernetes.io/service-account.uid: 58104d32-8e7e-4b4f-9e24-e8aafe8ead9a Type: kubernetes.io/service-account-token Data ==== ca.crt: 1066 bytes namespace: 20 bytes token: 
eyJhbGciOiJSUzI1NiIsImtpZCI6IkVEQnlPWER5ek85WjF0VlFScXNJRzZGOW1TMFJRN1lSNVRHcHlnZVdtb00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1nbG5kNCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjU4MTA0ZDMyLThlN2UtNGI0Zi05ZTI0LWU4YWFmZThlYWQ5YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.gqPgpjTrxmUqFJZTWJKd_LC0YkqfgmNZTdxdFBpymShL0tK8JOI8qnynzO2oZWJtPiK2a8EE3HqTBn-WwJEzOWHWiCTZhLca6e4RDQ-Mw_7vaTwxwdcBmm8fcTcMdupRbZIOl6kSLg7JmgQGXVS0q7fTQKdiMqNfU2b4cKC8TDZ_-YcT3offch0kuT8mu5Dug_c-CFpFd6uKJ_ox-ZajppWLvIjLRppxvR5km9SzksnpbszRggC6jMJIGI4OlU8sglGpAtF4-GN6LZnfG4NVSrTDiPFWno0HMH8zoVJH10_FTU0eRUHeq9ST_GHfjoyGO45mU6H9FufainwavxXGNQ

- Certificate error on access

openssl pkcs12 -export -clcerts -inkey dashboard.key -in dashboard.crt -out dashboard.p12 -name "kubernetes-admin"

- Dash Board

- Reference

Ingress

* Installing and connecting Kubernetes networking using Ingress

- Apply the latest version of the NGINX Ingress Controller

- Ingress tutorial

Private Docker Registry

- Pull the Docker registry image

docker pull registry:latest

- docker images

- hyunsu@kubemaster:/etc/docker/registry$ sudo mv ./config.yml ./config.yml.20201022

config

version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: redis
  filesystem:
    rootdirectory: /data/registry
redis:
  addr: redis:6379
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3

docker run -d -p 9002:5000 -e REGISTRY_STORAGE_DELETE_ENABLED=true --restart=always --name JoangPrivateDocker -v /home/hyunsu/config.yml:/home/hyunsu/config.yml registry:2

: The -e REGISTRY_STORAGE_DELETE_ENABLED=true option enables image deletion, so the DELETE request shown later works.

Without it, the error {"errors":[{"code":"UNSUPPORTED","message":"The operation is unsupported."}]} is returned.

- docker ps -a

- docker ps -l (most recent)

- netstat -an | grep 9002 (verify)

Using the private Docker registry

- docker build -t tomcat-meta:0.1 .

- docker tag tomcat-meta:0.1 web.joang.com:9002/tomcat-meta:0.1

- Push to the Docker registry using the tagged name

systemctl restart docker

cat /etc/docker/daemon.json

{ "insecure-registries" : ["192.168.0.130:9002"] }

- docker push web.joang.com:9002/tomcat-meta:0.1

Note: Docker talks to registries over HTTPS by default, so pushing to a registry that serves plain HTTP fails.

The push refers to repository [192.168.0.100:9002/tomcat-meta-batch]

Get https://192.168.0.100:9002/v2/: http: server gave HTTP response to HTTPS client

---> Therefore HTTP access must be allowed for this registry.

Edit /etc/docker/daemon.json (sudo vi /etc/docker/daemon.json) as follows:

{
"insecure-registries" : ["web.joang.com:9002"]
}

Using the IP address caused an error!

Add 127.0.1.1 web.joang.com to /etc/hosts:

127.0.0.1 localhost

127.0.1.1 kubemaster

127.0.1.1 web.joang.com

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

Error on 2023-10-03

[Docker] private registry "http: server gave HTTP response to HTTPS client": how to fix

- Apply the /etc/docker/daemon.json change described above

- Add the following to /etc/containerd/config.toml

...
...
[plugins."io.containerd.grpc.v1.cri".registry]
  config_path = ""
  [plugins."io.containerd.grpc.v1.cri".registry.auths]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."web.joang.com:9002".tls]
      insecure_skip_verify = true
  [plugins."io.containerd.grpc.v1.cri".registry.headers]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://registry-1.docker.io"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."web.joang.com:9002"]
      endpoint = ["http://web.joang.com:9002"]
...

- Order of work

hyunsu@2-kworker1:/etc/containerd$ sudo vi /etc/hosts

: 192.168.0.130 reg.joang.com

hyunsu@2-kworker1:/etc/containerd$ sudo vi /etc/containerd/config.toml

hyunsu@2-kworker1:/etc/containerd$ sudo vi /etc/docker/daemon.json

hyunsu@2-kworker1:/etc/containerd$ sudo systemctl restart containerd

hyunsu@2-kworker1:/etc/containerd$ sudo systemctl restart docker

- When the image is pulled from a YAML manifest, it is shown as follows

1. List the repositories

- Usage : curl -X GET <Repository URL>/v2/_catalog

http://web.joang.com:9002/v2/_catalog

2. List the tags of the repository to delete

- Usage : curl -X GET <Repository URL>/v2/<Repository name>/tags/list

http://web.joang.com:9002/v2/tomcat-meta/tags/list

3. Look up the content digest (hash); run this on the node where the registry container is running

- Usage : curl -v --silent -H "Accept: application/vnd.docker.distribution.manifest.v2+json" -X GET <Repository URL>/v2/<Repository name>/manifests/<Tag> 2>&1 | grep Docker-Content-Digest | awk '{print ($3)}'

Example) curl -v --silent -H "Accept: application/vnd.docker.distribution.manifest.v2+json" -X GET http://192.168.0.100:9002/v2/joang-mediawiki/manifests/1 2>&1 | grep Docker-Content-Digest | awk '{print ($3)}'

--> Result: sha256:e9c342dfa34bf2c3cf58503db8bc9a1298e233fadfbd6551ecea83aca80d701a

Example) curl -XGET -v -H "Accept: application/vnd.docker.distribution.manifest.v2+json" https://registry.hoya.com/v2/ubuntu/manifests/17.04

4. Delete the manifest

- Usage 1 : curl -X DELETE <Repository URL>/v2/<Repository name>/manifests/<content digest>

Example) curl -X DELETE https://registry.hoya.com/v2/ubuntu/manifests/sha256:213e05583a7cb8756a3f998e6dd65204ddb6b4c128e2175dcdf174cdf1877459

5. Run GC (garbage collection) to delete the unreferenced image data

- Usage : docker exec -it registry bin/registry garbage-collect /etc/docker/registry/config.yml

docker exec -it JoangPrivateDocker registry garbage-collect /etc/docker/registry/config.yml

6. Restart the registry server

docker stop registry

docker start registry
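Steps 3 and 4 above can be chained in a script. The following is a sketch with hypothetical helper names (manifest_url, extract_digest). It reuses the grep and awk pipeline from step 3 and additionally strips the carriage return that ends each HTTP header line, which would otherwise end up inside the DELETE URL.

```shell
# manifest_url BASE REPO REFERENCE
# Print the manifest URL for a tag or digest, e.g. BASE/v2/REPO/manifests/REFERENCE.
manifest_url() {
  printf '%s/v2/%s/manifests/%s\n' "$1" "$2" "$3"
}

# extract_digest
# Read "curl -v" output on stdin and print the Docker-Content-Digest value.
extract_digest() {
  grep -i 'Docker-Content-Digest' | awk '{print $3}' | tr -d '\r'
}

# Usage against the registry from these notes (not executed here):
#   base=http://web.joang.com:9002
#   digest=$(curl -v --silent \
#     -H "Accept: application/vnd.docker.distribution.manifest.v2+json" \
#     -X GET "$(manifest_url "$base" tomcat-meta 0.1)" 2>&1 | extract_digest)
#   curl -X DELETE "$(manifest_url "$base" tomcat-meta "$digest")"
```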

Example) Deleting files inside the registry filesystem

shell> curl -X GET https://registry.hoya.com/v2/_catalog

{"repositories":["debian","ubuntu"]}

shell> curl -X GET https://registry.hoya.com/v2/ubuntu/tags/list

{"name":"ubuntu","tags":["17.04","18.04"]}

shell> curl -v --silent -H "Accept: application/vnd.docker.distribution.manifest.v2+json" -X GET https://registry.hoya.com/v2/ubuntu/manifests/17.04 2>&1 | grep Docker-Content-Digest | awk '{print ($3)}'

sha256:213e05583a7cb8756a3f998e6dd65204ddb6b4c128e2175dcdf174cdf1877459

shell> docker exec -it registry sh => open a shell in the registry container

/ # cd /var/lib/registry/docker/registry/v2

/var/lib/registry/docker/registry/v2 # rm -rf ./repositories/ubuntu/_manifests/tags/17.04

/var/lib/registry/docker/registry/v2 # rm -rf ./repositories/ubuntu/_manifests/revisions/sha256/<content-digest>

shell> docker exec -it registry bin/registry garbage-collect /etc/docker/registry/config.yml

shell> docker stop registry

shell> docker start registry

Deleting a repository

Example) Deleting the ubuntu repository

shell> curl -X GET https://registry.hoya.com/v2/_catalog

{"repositories":["debian","ubuntu"]}

shell> docker exec -it registry sh => open a shell in the registry container

/ # cd /var/lib/registry/docker/registry/v2

/var/lib/registry/docker/registry/v2 # rm -rf ./repositories/ubuntu/ => delete the repository

/var/lib/registry/docker/registry/v2 # exit

shell> docker exec -it registry bin/registry garbage-collect /etc/docker/registry/config.yml

shell> docker stop registry

shell> docker start registry

TroubleShooting

Symptom) Deleting with curl fails with the error shown below

> DELETE /v2/ubuntu/manifests/sha256:e5dd9dbb37df5b731a6688fa49f4003359f6f126958...............
> User-Agent: curl/7.29.0
> Host: registry.hoya.com
> Accept: application/vnd.docker.distribution.manifest.v2+json
>
< HTTP/1.1 405 Method Not Allowed
< Content-Type: application/json; charset=utf-8
< Docker-Distribution-Api-Version: registry/2.0
< X-Content-Type-Options: nosniff
< Date: Thu, 02 Apr 2020 03:24:55 GMT
< Content-Length: 78
<
{"errors":[{"code":"UNSUPPORTED","message":"The operation is unsupported."}]}
* Connection #0 to host registry.hoya.com left intact

Cause)

If the registry was started without the environment variable -e REGISTRY_STORAGE_DELETE_ENABLED=true, the DELETE method is not allowed.

Fix)

Start the registry with the "-e REGISTRY_STORAGE_DELETE_ENABLED=true" environment variable added.

- TroubleShooting

Symptom) Running curl produces the following error

- Debian, Ubuntu

shell> curl -X GET https://registry.hoya.com/v2/_catalog
curl: (60) SSL certificate problem: self signed certificate in certificate chain
More details here: https://curl.haxx.se/docs/sslcerts.html

curl failed to verify the legitimacy of the server and therefore could not
establish a secure connection to it. To learn more about this situation and
how to fix it, please visit the web page mentioned above.
shell>

- CentOS

shell> curl -X GET https://registry.hoya.com/v2/_catalog
curl: (60) Peer's certificate issuer has been marked as not trusted by the user.
More details here: http://curl.haxx.se/docs/sslcerts.html

curl performs SSL certificate verification by default, using a "bundle"
of Certificate Authority (CA) public keys (CA certs). If the default
bundle file isn't adequate, you can specify an alternate file
using the --cacert option.
If this HTTPS server uses a certificate signed by a CA represented in
the bundle, the certificate verification probably failed due to a
problem with the certificate (it might be expired, or the name might
not match the domain name in the URL).
If you'd like to turn off curl's verification of the certificate, use
the -k (or --insecure) option.
shell>

Cause)

When the registry container serves with a privately signed certificate, curl reports a certificate error.

Fix)

1. Debian, Ubuntu

shell> cp rootca.crt /usr/local/share/ca-certificates
shell> update-ca-certificates
Updating certificates in /etc/ssl/certs...
1 added, 0 removed; done.
Running hooks in /etc/ca-certificates/update.d...
done.
shell>

2. CentOS

Copy the private root CA certificate into /etc/pki/ca-trust/source/anchors/ and then run update-ca-trust.

shell> cp rootca.crt /etc/pki/ca-trust/source/anchors/
shell> update-ca-trust

3. Use the -k or --insecure option with curl

shell> curl -k https://www.domain.com
OR
shell> curl --insecure https://www.domain.com

Private image delete

- curl -v --silent -H "Accept: application/vnd.docker.distribution.manifest.v2+json" -X GET http://192.168.56.3:9002/v2/tomcat-synapse/manifests/0.1 2>&1 | grep Docker-Content-Digest | awk '{print ($3)}'

- curl -v --silent -H "Accept: application/vnd.docker.distribution.manifest.v2+json" -X DELETE http://192.168.56.3:9002/v2/tomcat-synapse/manifests/sha256:65336b7ee5a56dc2a7294c02fbb515542e5212a7ea193943160d9ecbb4ca0f62

- GC(Garbage Collection)

docker exec -it JoangPrivateDocker registry garbage-collect /etc/docker/registry/config.yml

- Clean up images

docker image prune -f

Other Kubernetes commands

Force-deleting zombie pods

- After a server restart, zombie pods can stop the service. Force-delete them as follows

* kubectl delete pod tomcat-meta-d665c8557-glcpp -n tomcat-apps --grace-period=0 --force

Deleting a namespace

* kubectl delete namespace tomcat-apps

Private registry misbehaves after a reboot

- When the old private registry container is still present

docker container ls -a | grep Joang   (check)

docker container rm 1346f165e3b6   (remove), then start the registry again ... the images must be pushed again. Open question: shouldn't it start automatically after a reboot?

Ingress: only the / path works

- When only / works

- nginx.ingress.kubernetes.io/use-regex: "true"

- https://kubernetes.github.io/ingress-nginx/user-guide/ingress-path-matching/
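To show where the use-regex annotation goes, the sketch below prints an Ingress manifest in the same heredoc style used for the Dashboard account. The function name print_regex_ingress, the /meta path, and the ingress class name nginx are assumptions for illustration and are not taken from the original cluster.

```shell
# print_regex_ingress NAME NAMESPACE SERVICE PORT
# Print an Ingress manifest whose path is matched as a regular expression
# by ingress-nginx (use-regex annotation).
print_regex_ingress() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
  namespace: $2
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /meta(/|\$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: $3
            port:
              number: $4
EOF
}

# Usage (not executed here):
#   print_regex_ingress tomcat-meta tomcat-apps tomcat-meta 8080 | kubectl apply -f -
```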

Entering a pod

kubectl exec -it -n meta-apps meta-meta-59b56f6694-smtmq -- bash

Restarting pods

kubectl rollout restart ds -n kube-system weave-net

Istio Kiali

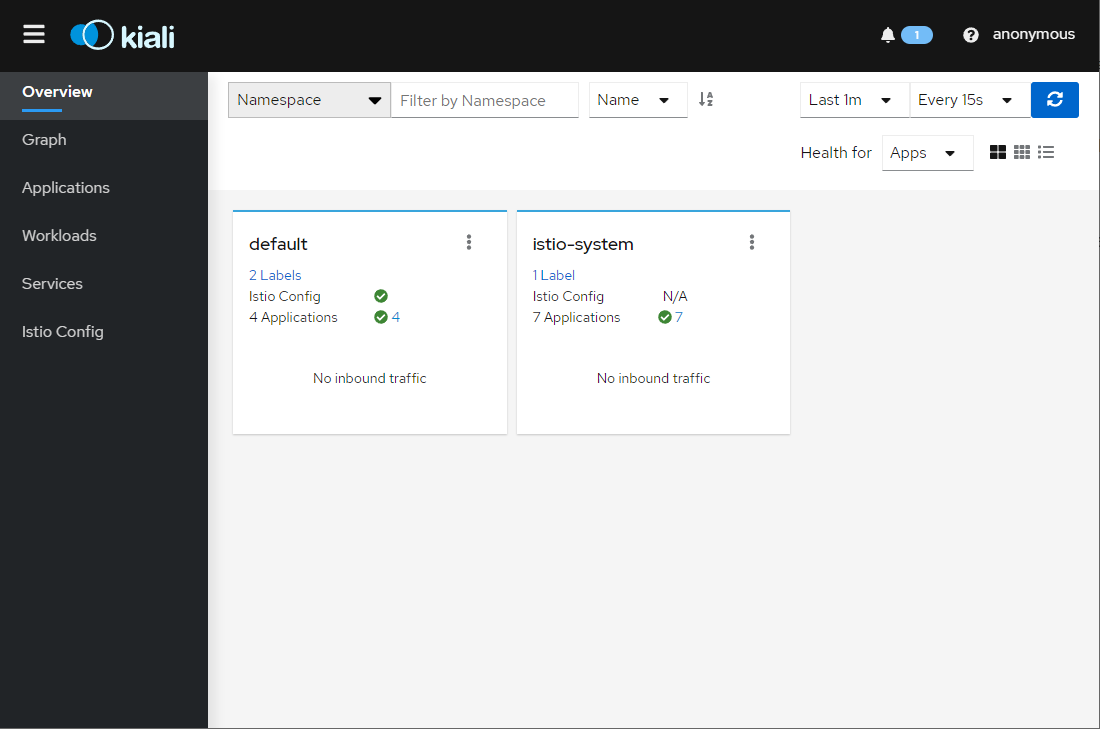

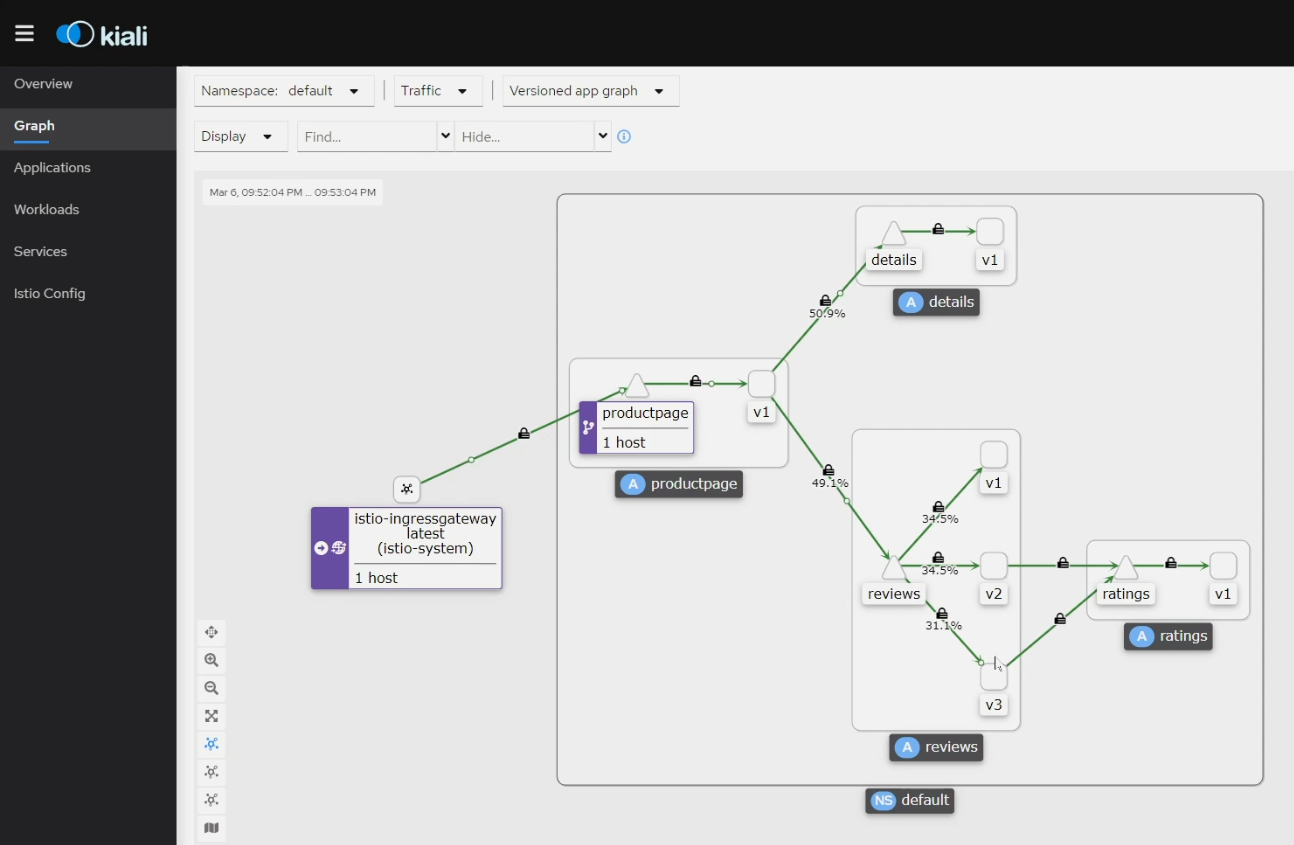

Introduction to Kiali (Kubernetes add-on for Istio)

Source: 이종하

- Istio + Kiali :

Istio Install

Kiali Install: provides a web dashboard for controlling Istio policies and checking how Istio is behaving

https://isn-t.tistory.com/43

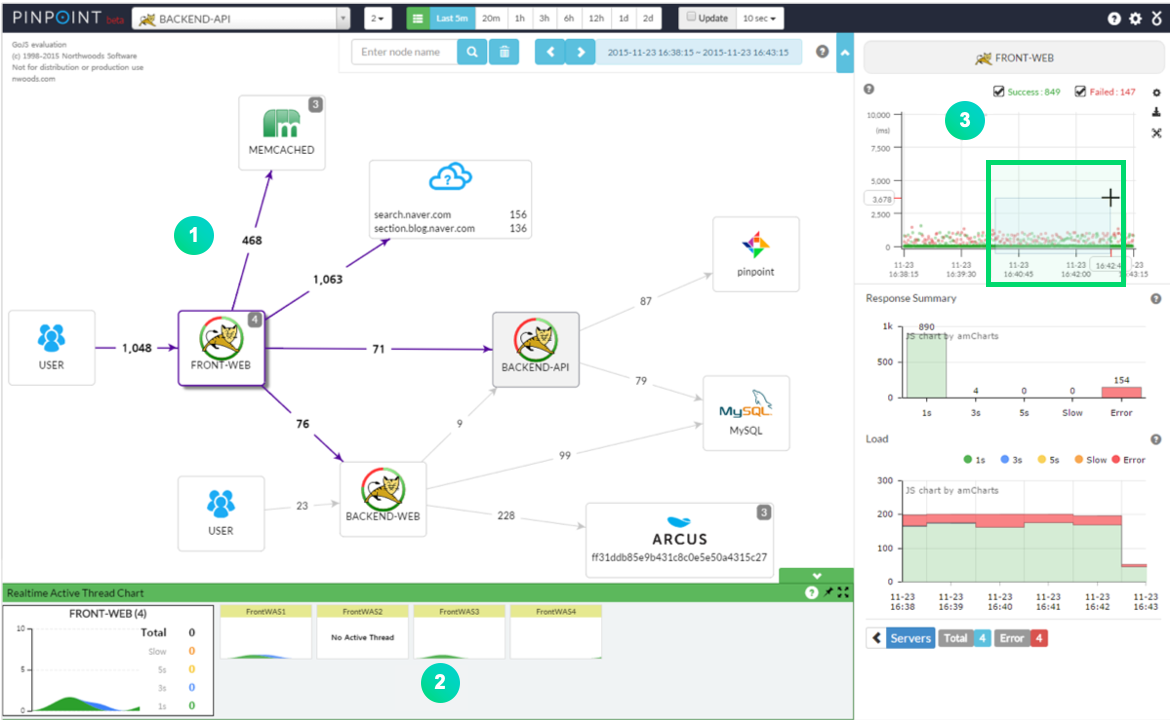

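Adopting the open-source APM Pinpoint, with a review:

https://tech.trenbe.com/2022/02/22/pinpoint.html

https://pinpoint-apm.github.io/pinpoint/index.html

Introduction to Pinpoint

Pinpoint is a platform for performance analysis, diagnosis, and tracing of distributed services and systems. It provides tracing and analysis for architectures built from N-tier SOA (Service Oriented Architecture) and microservices, and it offers transaction analysis for distributed applications, topology detection, and diagnostics based on bytecode instrumentation.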

https://guide-fin.ncloud-docs.com/docs/pinpoint-pinpoint-1-1

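Profiles available when installing Istio

- default: enables components according to the default settings of the IstioOperator API. This profile is recommended for production deployments and for primary clusters in a multicluster mesh. You can display the default settings by running the istioctl profile dump command.

- demo: a configuration designed to showcase Istio functionality with modest resource requirements. It is suitable for running the Bookinfo application and associated tasks. This profile enables high levels of tracing and access logging, so it is not suitable for performance tests.

- minimal: same as the default profile, but only the control plane components are installed. This allows the control plane and data plane components (e.g., gateways) to be configured using separate profiles.

- remote: used for configuring a remote cluster that is managed by an external control plane or by a control plane in a primary cluster of a multicluster mesh.

- empty: deploys nothing. This can be useful as a base profile for custom configuration.

- preview: contains experimental features. It is intended for exploring new features coming to Istio. Stability, security, and performance are not guaranteed, so use it with care.

- ambient: designed to help you get started with ambient mesh.

Istio versions by Kubernetes version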

| Version | Currently Supported | Release Date | End of Life | Supported Kubernetes Versions | Tested, but not supported |
|---|---|---|---|---|---|
| master | No, development only | | | 1.29, 1.30, 1.31, 1.32 | 1.23, 1.24, 1.25, 1.26, 1.27, 1.28 |
| 1.24 | Yes | November 7, 2024 | ~Aug 2025 (Expected) | 1.28, 1.29, 1.30, 1.31 | 1.23, 1.24, 1.25, 1.26, 1.27 |
| 1.23 | Yes | Aug 14, 2024 | ~May 2025 (Expected) | 1.27, 1.28, 1.29, 1.30 | 1.23, 1.24, 1.25, 1.26 |
| 1.22 | Yes | May 13, 2024 | ~Jan 2025 (Expected) | 1.27, 1.28, 1.29, 1.30 | 1.23, 1.24, 1.25, 1.26 |
| 1.21 | Yes | Mar 13, 2024 | Sept 27, 2024 | 1.26, 1.27, 1.28, 1.29 | 1.23, 1.24, 1.25 |
| 1.20 | No | Nov 14, 2023 | Jun 25, 2024 | 1.25, 1.26, 1.27, 1.28, 1.29 | 1.23, 1.24 |
| 1.19 | No | Sept 5, 2023 | Apr 24, 2024 | 1.25, 1.26, 1.27, 1.28 | 1.21, 1.22, 1.23, 1.24 |
| 1.18 | No | Jun 3, 2023 | Jan 4, 2024 | 1.24, 1.25, 1.26, 1.27 | 1.20, 1.21, 1.22, 1.23 |
| 1.17 | No | Feb 14, 2023 | Oct 27, 2023 | 1.23, 1.24, 1.25, 1.26 | 1.16, 1.17, 1.18, 1.19, 1.20, 1.21, 1.22 |
| 1.16 | No | Nov 15, 2022 | Jul 25, 2023 | 1.22, 1.23, 1.24, 1.25 | 1.16, 1.17, 1.18, 1.19, 1.20, 1.21 |
| 1.15 | No | Aug 31, 2022 | Apr 4, 2023 | 1.22, 1.23, 1.24, 1.25 | 1.16, 1.17, 1.18, 1.19, 1.20, 1.21 |
| 1.14 | No | May 24, 2022 | Dec 27, 2022 | 1.21, 1.22, 1.23, 1.24 | 1.16, 1.17, 1.18, 1.19, 1.20 |
| 1.13 | No | Feb 11, 2022 | Oct 12, 2022 | 1.20, 1.21, 1.22, 1.23 | 1.16, 1.17, 1.18, 1.19 |
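The table can be turned into a quick pre-install check. This is a sketch only: the function names `istio_supported_k8s` and `istio_supports_k8s` are hypothetical, and the data is hand-copied from the rows marked "Yes" above, so it goes stale as soon as the table does.

```shell
#!/bin/sh
# Supported Kubernetes minor versions per Istio release, copied from the
# rows marked "Yes" in the table above. Hypothetical helper, not an Istio tool.
istio_supported_k8s() {
    case "$1" in
        1.24) echo "1.28 1.29 1.30 1.31" ;;
        1.23) echo "1.27 1.28 1.29 1.30" ;;
        1.22) echo "1.27 1.28 1.29 1.30" ;;
        1.21) echo "1.26 1.27 1.28 1.29" ;;
        *)    return 1 ;;
    esac
}

# istio_supports_k8s ISTIO_MINOR K8S_MINOR
# Returns 0 if listed as supported, 1 if not, 2 if the Istio release is unknown.
istio_supports_k8s() {
    supported=$(istio_supported_k8s "$1") || return 2
    for v in $supported; do
        [ "$v" = "$2" ] && return 0
    done
    return 1
}
```

For example, `istio_supports_k8s 1.24 1.30 && echo ok` prints `ok`, while `istio_supports_k8s 1.24 1.27` fails because 1.27 is only listed as tested for Istio 1.24.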

The components marked as ✔ are installed within each profile:

| | default | demo | minimal | remote | empty | preview | ambient |
|---|---|---|---|---|---|---|---|
| Core components | | | | | | | |
| istio-egressgateway | | ✔ | | | | | |
| istio-ingressgateway | ✔ | ✔ | | | | ✔ | |
| istiod | ✔ | ✔ | ✔ | | | ✔ | ✔ |
| CNI | | | | | | | ✔ |
| Ztunnel | | | | | | | ✔ |

To further customize Istio, a number of addon components can also be installed. Refer to integrations for more details.

Installing Istio

curl -L https://istio.io/downloadIstio | sh - <-- download and review the script before running it

cd istio-1.9.1 <-- the directory name follows the downloaded version

export PATH=$PWD/bin:$PATH

istioctl profile list

istioctl install --set profile=demo -y

[hyunsu@2-RockyKubeMaster ~]$ istioctl install --set profile=default -y

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Installation complete

Making this installation the default for injection and validation.

Thank you for installing Istio 1.17. Please take a few minutes to tell us about your install/upgrade experience! https://forms.gle/hMHGiwZHPU7UQRWe9

[hyunsu@2-RockyKubeMaster ~]$

kubectl label namespace showcase1-apps istio-injection=enabled
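The note above says to download the script, check it, and only then run it. A sketch of that flow with a pinned version follows. The helper name `istio_download_steps` and the file name `downloadIstio.sh` are hypothetical, and the version passed in is the caller's choice; `ISTIO_VERSION` is the environment variable the download script reads to select a release. The function only prints the steps.

```shell
#!/bin/sh
# istio_download_steps VERSION (hypothetical helper):
# print the download, review, and run steps for a pinned Istio version.
# Nothing is executed; the commands are echoed so they can be reviewed first.
istio_download_steps() {
    version=$1
    cat <<EOF
curl -L https://istio.io/downloadIstio -o downloadIstio.sh
less downloadIstio.sh
ISTIO_VERSION=$version sh downloadIstio.sh
cd istio-$version
export PATH=\$PWD/bin:\$PATH
EOF
}
```

Pinning the version keeps the `cd` target predictable instead of depending on whatever release is current. After labeling a namespace, `kubectl get namespace -L istio-injection` shows which namespaces carry the label.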

Installing Kiali

| Istio | Kiali Min | Kiali Max | Notes |
|---|---|---|---|
| 1.24 | 2.0.0 | | |
| 1.23 | 1.87.0 | 2.0.0 | Kiali v2 requires Kiali v1 non-default namespace management (i.e. accessible_namespaces) to migrate to Discovery Selectors. |
| 1.22 | 1.82.0 | 1.86.2 | Kiali v1.86 is the recommended minimum for Istio Ambient users. v1.22 is required starting with Kiali v1.86.1. |
| 1.21 | 1.79.0 | 1.81.0 | Istio 1.21 is out of support. |
| 1.20 | 1.76.0 | 1.78.0 | Istio 1.20 is out of support. |
| 1.19 | 1.72.0 | 1.75.0 | Istio 1.19 is out of support. |
| 1.18 | 1.67.0 | 1.73.0 | Istio 1.18 is out of support. |
| 1.17 | 1.63.2 | 1.66.1 | Istio 1.17 is out of support. Avoid 1.63.0,1.63.1 due to a regression. |
| 1.16 | 1.59.1 | 1.63.2 | Istio 1.16 is out of support. Avoid 1.62.0,1.63.0,1.63.1 due to a regression. |
| 1.15 | 1.55.1 | 1.59.0 | Istio 1.15 is out of support. |
| 1.14 | 1.50.0 | 1.54 | Istio 1.14 is out of support. |
| 1.13 | 1.45.1 | 1.49 | Istio 1.13 is out of support. |
| 1.12 | 1.42.0 | 1.44 | Istio 1.12 is out of support. |
| 1.11 | 1.38.1 | 1.41 | Istio 1.11 is out of support. |
| 1.10 | 1.34.1 | 1.37 | Istio 1.10 is out of support. |
| 1.9 | 1.29.1 | 1.33 | Istio 1.9 is out of support. |
| 1.8 | 1.26.0 | 1.28 | Istio 1.8 removes all support for mixer/telemetry V1, as does Kiali 1.26.0. Use earlier versions of Kiali for mixer support. |
| 1.7 | 1.22.1 | 1.25 | Istio 1.7 istioctl no longer installs Kiali. Use the Istio samples/addons for quick demo installs. Istio 1.7 is out of support. |
| 1.6 | 1.18.1 | 1.21 | Istio 1.6 introduces CRD and Config changes, Kiali 1.17 is recommended for Istio < 1.6. |
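Checking a candidate Kiali version against the Min/Max columns is easy to get wrong by eye. A small sketch, assuming a `sort` that supports `-V` (GNU coreutils does); the function names `version_le` and `kiali_in_range` are hypothetical.

```shell
#!/bin/sh
# version_le A B: succeeds when version A <= version B.
# Relies on `sort -V` (version sort), assumed to be available.
version_le() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# kiali_in_range VERSION MIN MAX: succeeds when MIN <= VERSION <= MAX.
kiali_in_range() {
    version_le "$2" "$1" && version_le "$1" "$3"
}
```

For the Istio 1.17 row, `kiali_in_range 1.65.0 1.63.2 1.66.1` succeeds and `kiali_in_range 1.67.0 1.63.2 1.66.1` fails. The range check does not know about the "avoid" notes in the last column, and two-part bounds such as 1.54 may need a patch suffix before comparing against a three-part version.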

Certificate replacement

Manual certificate replacement

1. Check the certificate expiration dates

$ kubeadm certs check-expiration

2. Back up the certificates in case something goes wrong

$ cp -r /etc/kubernetes ~/backups

3. Renew the certificates

$ kubeadm certs renew all

5. Restart kube-apiserver, kube-controller-manager, and kube-scheduler

$ kill -s SIGHUP $(pidof kube-apiserver)

$ kill -s SIGHUP $(pidof kube-controller-manager)

$ kill -s SIGHUP $(pidof kube-scheduler)

$ systemctl restart kubelet

$ systemctl daemon-reload

$ systemctl restart docker

(After docker restarts, any stopped containers have to be started again.)

6. Check the AGE column to confirm that apiserver, controller-manager, and scheduler were restarted

$ kubectl get pods --all-namespaces -o wide

7. Copy the renewed admin kubeconfig

$ cp /etc/kubernetes/admin.conf /root/.kube/config

8. List the nodes

$ kubectl get nodes

$ kubeadm certs check-expiration # check the dates

If the output looks normal, you are done!
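One caveat with the backup step: `cp -r /etc/kubernetes ~/backups` puts the copy in a different place depending on whether `~/backups` already exists, and a second run mixes into the first backup. A sketch of a timestamped variant follows; the helper name `backup_dir` and the naming scheme are assumptions, not part of kubeadm.

```shell
#!/bin/sh
# backup_dir SRC DEST_ROOT (hypothetical helper):
# copy SRC into DEST_ROOT/<basename of SRC>-<YYYYmmdd-HHMMSS>
# and print the path of the new copy.
backup_dir() {
    src=$1
    dest_root=$2
    target="$dest_root/$(basename "$src")-$(date +%Y%m%d-%H%M%S)"
    mkdir -p "$dest_root" || return 1
    # -p keeps modes and timestamps, which matters for private key files.
    cp -rp "$src" "$target" || return 1
    echo "$target"
}
```

Run as root, `backup_dir /etc/kubernetes /root/backups` leaves each backup in its own directory, so a failed renewal can be rolled back from a known snapshot.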

Containerd commands

- sudo ctr -n k8s.io container list (lists containers)

- Command that sets the runtime endpoint and image endpoint persistently

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock --set image-endpoint=unix:///run/containerd/containerd.sock

--> sudo crictl ps

- sudo crictl exec -it 12ddcc840f817 /bin/bash
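The `crictl config --set` command persists its settings in a config file, `/etc/crictl.yaml` by default. As a sketch, the same two settings can be written directly. The helper name `write_crictl_config` is hypothetical, and the target path is a parameter so the function can be tried against a scratch file first.

```shell
#!/bin/sh
# write_crictl_config PATH [SOCKET] (hypothetical helper):
# write a crictl config that points both endpoints at the same socket.
# SOCKET defaults to the containerd socket used in the command above.
write_crictl_config() {
    config_path=$1
    socket=${2:-unix:///run/containerd/containerd.sock}
    cat > "$config_path" <<EOF
runtime-endpoint: $socket
image-endpoint: $socket
EOF
}
```

Writing to a temporary path and comparing it with the existing file before replacing `/etc/crictl.yaml` avoids clobbering other settings that may already be there.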